How close can AI get to a clinical scan just from a photo? This close.

The most advanced AI model today might be able to tell you how fat you are, accurately. And this is important.

From my first crude test, GPT-4o's ability to estimate body fat percentage from photos rivals even gold-standard tools like DEXA scans.

Why this matters: As a direct measurement of adiposity (and inversely, lean body mass), BFP is a more precise and meaningful health metric than Body Mass Index (BMI), but measuring it typically requires specialized tools that are expensive or hard to access.

From my training as a strength coach, I learned that a trained eye (with expert intuition) can judge BFP roughly as accurately as other methods. I figured the same might apply to ChatGPT, given its ability to analyze images.

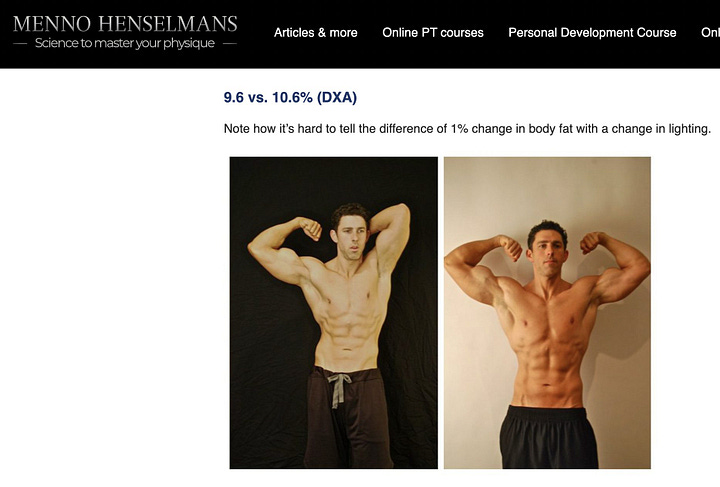

To test this, I fed the images from Menno Henselmans' "Visual Guides to Body Fat Percentage" (male and female) into ChatGPT and compared its estimates to the original numbers.

The results are in:

Men: median signed error: +0.8%, median absolute error: 2.4%

Women: median signed error: +3.5%, median absolute error: 5.7% (some underestimation in the higher percentages for women, but BFP is generally harder to judge in women)
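For anyone who wants to run a similar comparison, here is a minimal sketch of how the two summary numbers can be computed. It is not the exact script behind the results above. The function name `error_summary` and the sample values are hypothetical, and it assumes signed error means estimate minus reference, so a positive value is an overestimate.

```python
from statistics import median


def error_summary(estimates, references):
    """Return (median signed error, median absolute error) in percentage points.

    Assumed convention: signed error = estimate - reference,
    so a positive value means the estimate was too high.
    """
    if len(estimates) != len(references):
        raise ValueError("estimates and references must have the same length")
    signed = [est - ref for est, ref in zip(estimates, references)]
    absolute = [abs(err) for err in signed]
    return median(signed), median(absolute)


# Hypothetical illustration data, NOT the values from the visual guides.
reference_bfp = [10, 15, 20, 25, 30]
estimated_bfp = [11, 13, 24, 25, 33]

signed_err, abs_err = error_summary(estimated_bfp, reference_bfp)
print(f"median signed error: {signed_err:+} pp, median absolute error: {abs_err} pp")
```

The signed error shows whether estimates lean high or low overall, while the absolute error shows how far off a typical estimate is regardless of direction. That is why the two numbers can differ a lot.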

With expensive methods like DEXA scans having an error of around 2%, this is pretty good. And GPT-4o placed every case in the right rough category, from “bodybuilding stage-ready” to “obese II.”

While not a medical diagnosis (!), a photo and ChatGPT might give you a decent starting point for understanding and improving your health. BMI is outdated. DEXA is expensive. ChatGPT might be the new middle ground.

This is very interesting!

I wonder whether these photos were used to train the model. If they were, and the corresponding BFP values appeared close to the pictures, the model would probably have learned the association. Privacy questions aside, one could probably run the same test with one's own photos.

This is great. Did you use an elaborate prompt?